Products and Draft Patents

AuditoryAI

The invention is a new process for registering the existence of sound objects, localizing them, prioritizing them, and directing the behaviour of an agent (e.g. a robot) towards them.

gazeTracking

The product enables an intelligent system equipped with a single camera to infer gaze direction from the head orientation and the iris position.

-----------------------

Testimonials

-------------------------------------------------

Prof. Samia Nefti Meziani/Robotics and Automation, Salford University

--------------------------------------------------

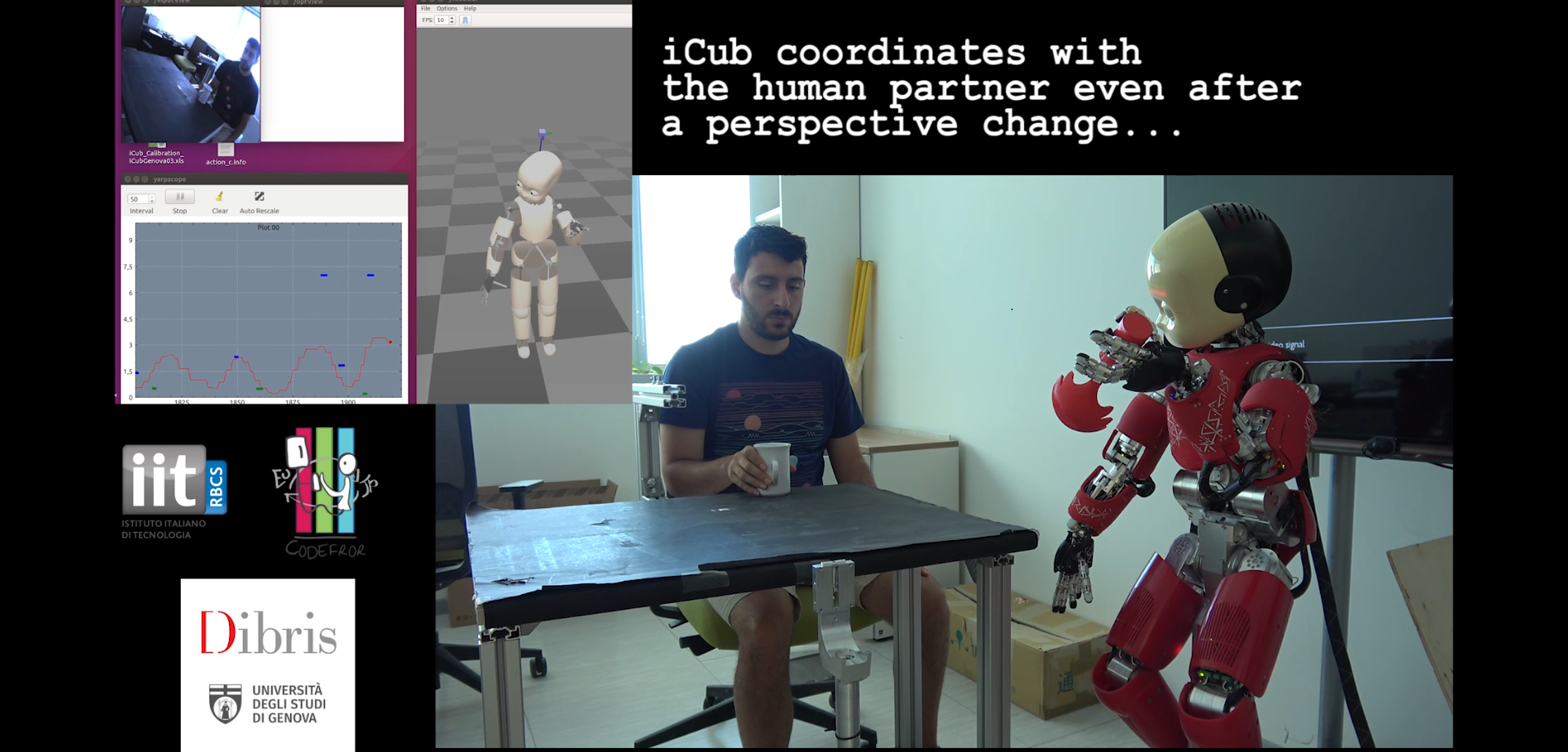

Prof. Davide Brugali/Robotics, Università degli Studi di Bergamo

View Invariant Robot Adaptation to Human Action Timing

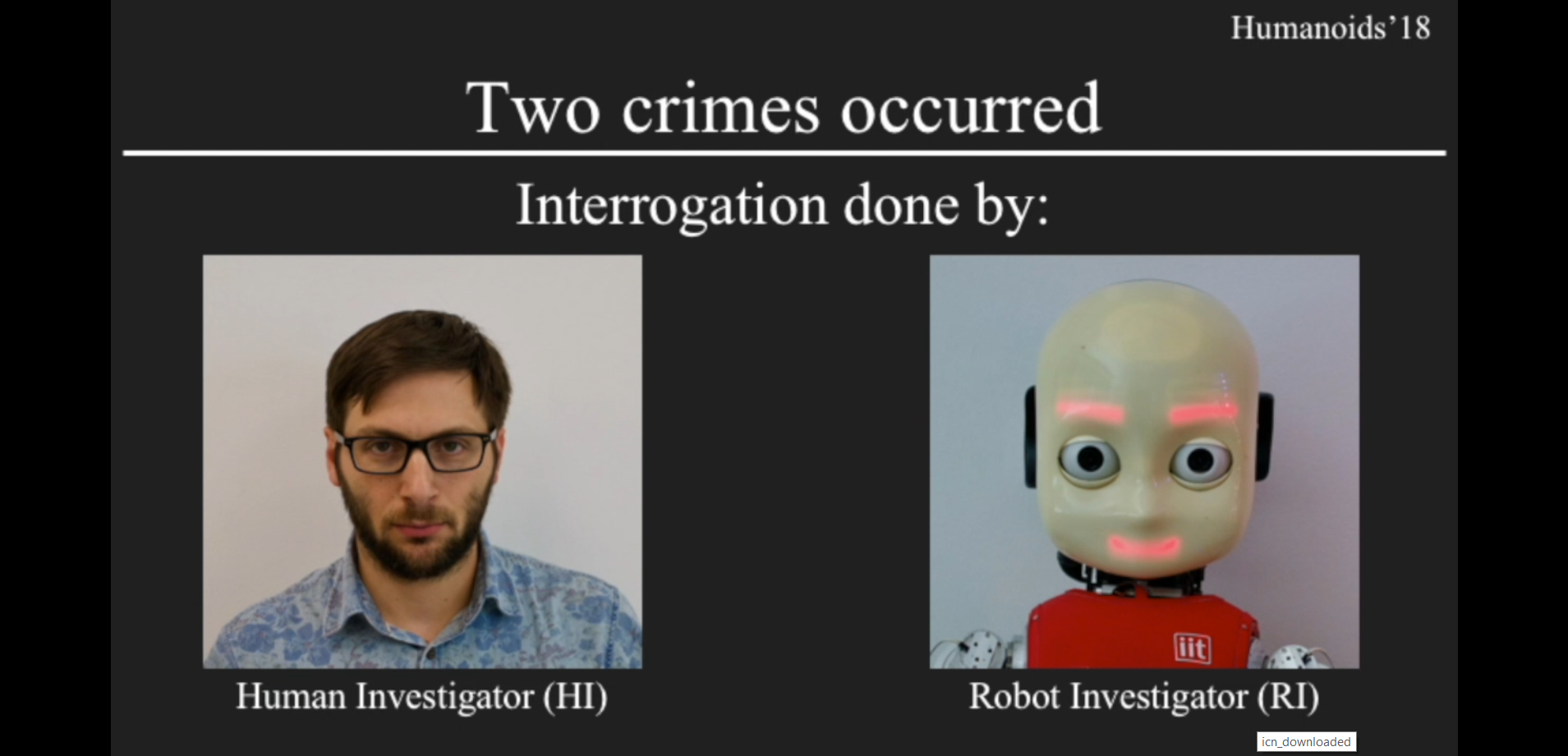

Can a Humanoid Robot Spot a Liar?

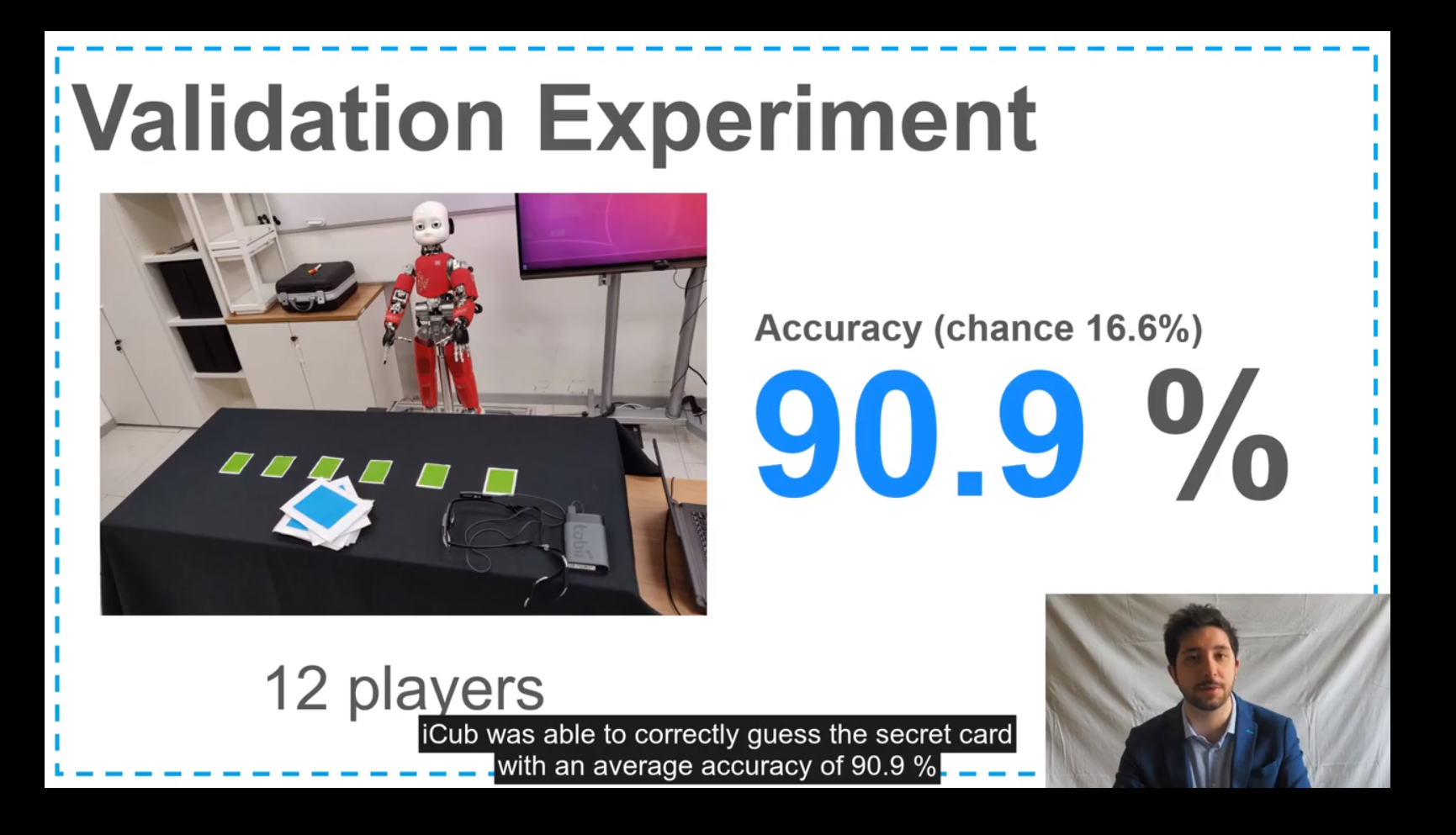

Do You See the Magic

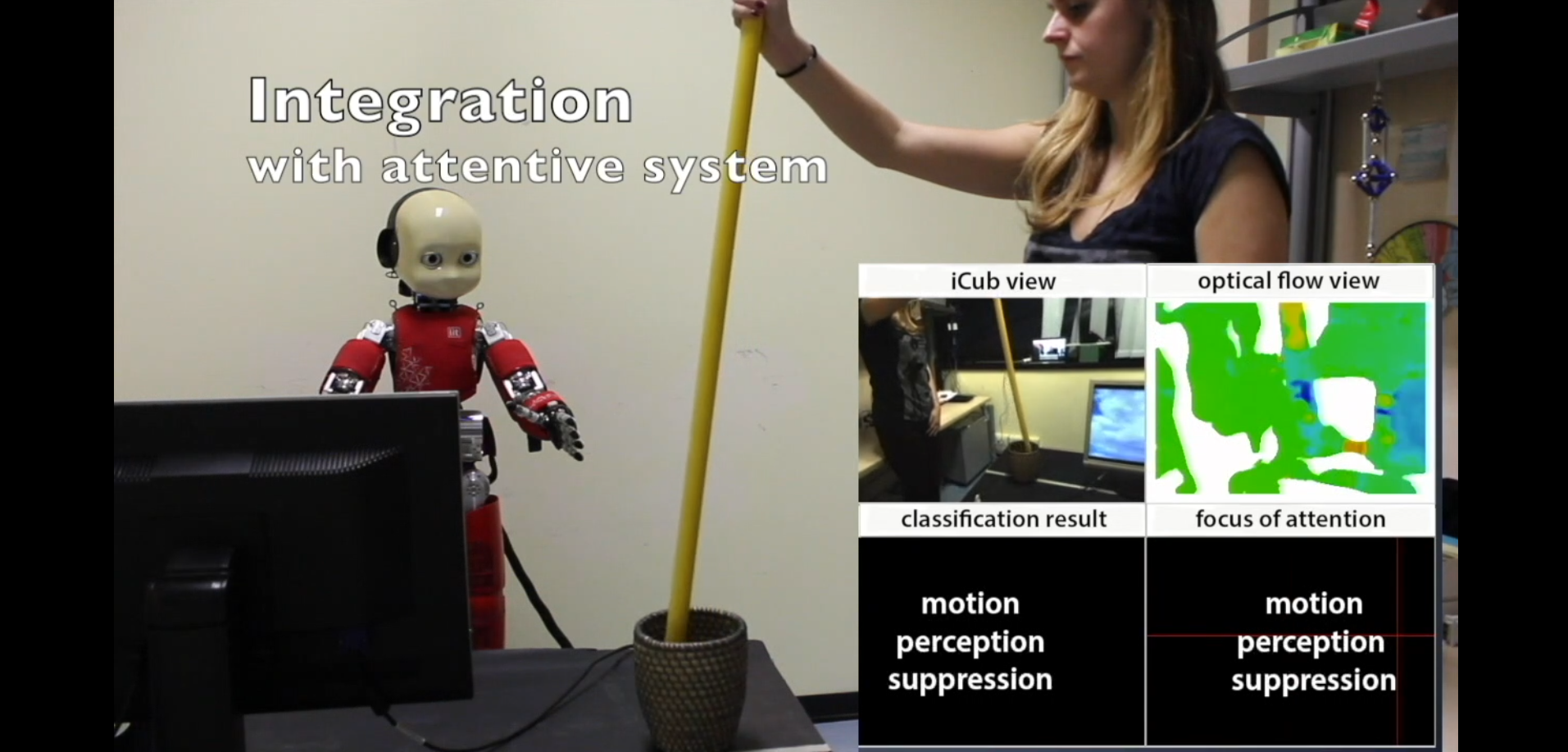

Biological movement detector enhances the attention skills of humanoid robot iCub
